April 17, 2021, was a day like any other day on the sun, until a brilliant flash erupted and an enormous cloud of solar material billowed away from our star. Such outbursts from the sun are not unusual, but this one was unusually widespread, hurling high-speed protons and electrons at velocities nearing the speed of light and striking several spacecraft across the inner solar system.

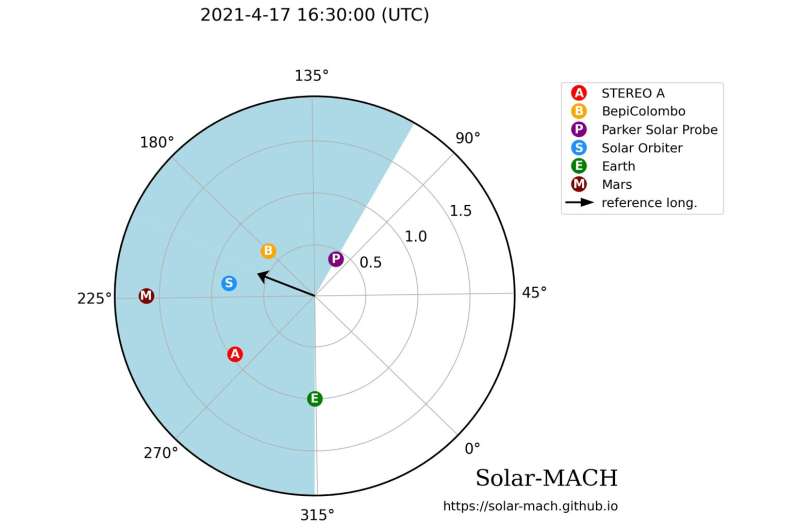

In fact, it was the first time such high-speed protons and electrons—called solar energetic particles (SEPs)—were observed by spacecraft at five different, well-separated locations between the sun and Earth as well as by spacecraft orbiting Mars. And now these diverse perspectives on the solar storm are revealing that different types of potentially dangerous SEPs can be blasted into space by different solar phenomena and in different directions, causing them to become widespread.

“SEPs can harm our technology, such as satellites, and disrupt GPS,” said Nina Dresing of the Department of Physics and Astronomy, University of Turku in Finland. “Also, humans in space or even on airplanes on polar routes can suffer harmful radiation during strong SEP events.”

Scientists like Dresing are eager to find out where these particles come from exactly—and what propels them to such high speeds—to better learn how to protect people and technology in harm’s way. Dresing led a team of scientists that analyzed what kinds of particles struck each spacecraft and when. The team published its results in the journal Astronomy & Astrophysics.

Currently on its way to Mercury, the BepiColombo spacecraft, a joint mission of ESA (the European Space Agency) and JAXA (Japan Aerospace Exploration Agency), was closest to the blast’s direct firing line and was pounded with the most intense particles. At the same time, NASA’s Parker Solar Probe and ESA’s Solar Orbiter were on opposite sides of the flare, but Parker Solar Probe was closer to the sun, so it took a harder hit than Solar Orbiter did.

Next in line was one of NASA’s two Solar Terrestrial Relations Observatory (STEREO) spacecraft, STEREO-A, followed by the NASA/ESA Solar and Heliospheric Observatory (SOHO) and NASA’s Wind spacecraft, which were closer to Earth and well away from the blast. Orbiting Mars, NASA’s MAVEN and ESA’s Mars Express spacecraft were the last to sense particles from the event.

Altogether, the particles were detected over 210 longitudinal degrees of space (almost two-thirds of the way around the sun)—which is a much wider angle than typically covered by solar outbursts. Plus, each spacecraft recorded a different flood of electrons and protons at its location. The differences in the arrival and characteristics of the particles recorded by the various spacecraft helped the scientists piece together when and under what conditions the SEPs were ejected into space.

These clues suggested to Dresing’s team that the SEPs were not blasted out by a single source all at once but were propelled in different directions and at different times, potentially by different types of solar eruptions.

“Multiple sources are likely contributing to this event, explaining its wide distribution,” said team member Georgia de Nolfo, a heliophysics research scientist at NASA’s Goddard Space Flight Center in Greenbelt, Maryland. “Also, it appears that, for this event, protons and electrons may come from different sources.”

The team concluded that the electrons were likely driven into space quickly by the initial flash of light—a solar flare—while the protons were pushed along more slowly, likely by a shock wave from the cloud of solar material, or coronal mass ejection.

“This is not the first time that people have conjectured that electrons and protons have had different sources for their acceleration,” de Nolfo said. “This measurement was unique in that the multiple perspectives enabled scientists to separate the different processes better, to confirm that electrons and protons may originate from different processes.”

In addition to the flare and coronal mass ejection, spacecraft recorded four groups of radio bursts from the sun during the event, which could have been accompanied by four different particle blasts in different directions. This observation could help explain how the particles became so widespread.

“We had different distinct particle injection episodes—which went into significantly different directions—all contributing together to the widespread nature of the event,” Dresing said.

“This event was able to show how important multiple perspectives are in untangling the complexity of the event,” de Nolfo said.

These results show the promise of future NASA heliophysics missions that will use multiple spacecraft to study widespread phenomena, such as the Geospace Dynamics Constellation (GDC), SunRISE, PUNCH, and HelioSwarm. While single spacecraft can reveal conditions locally, multiple spacecraft orbiting in different locations provide deeper scientific insight and offer a more complete picture of what’s happening in space and around our home planet.

The results also preview the work that will be done by future missions such as MUSE, IMAP, and ESCAPADE, which will study explosive solar events and the acceleration of particles into the solar system.